There are various libraries we can use to summarize text with AI. In this tutorial, we will explore Hugging Face Transformers and some of its models for summarizing text in Python.

This tutorial focuses on the hands-on side of AI text summarization. If you are looking for a tutorial on building an AI model with neural networks, please comment below.

Contents

- What is Hugging Face Transformers?

- Is Hugging Face Transformers API Free?

- Text Summarization in Python with Flan T5 Transformers Model

- Text Summarization in Python with T5 One Line Transformers Model

- Text Summarization in Python with BART Large CNN Transformers Model

- Conclusion

What is Hugging Face Transformers?

Hugging Face Transformers is a Python library that provides many state-of-the-art NLP models such as BERT, GPT-2, T5, etc.

I have been using this library for over two years, and it is still a great library for experimenting with NLP models.

Using these models, we can perform many NLP tasks, such as text classification, text generation, and text summarization.

We will explore some of these models in this tutorial.

Is Hugging Face Transformers API Free?

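The Transformers library itself is open source and free. When you load a model with pipeline() as in this tutorial, the model is downloaded and run on your own machine, so no API calls are made. Hugging Face's hosted Inference API is also free to use, but if you want to make unlimited calls, Hugging Face charges $9 a month for a subscription.

Hugging Face also provides a dedicated API for custom use, which costs more, but within the scope of this tutorial, we won't need to pay anything.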

Text Summarization in Python with Flan T5 Transformers Model

For the text summarization task, we can use the pre-trained Flan T5 model for inference.

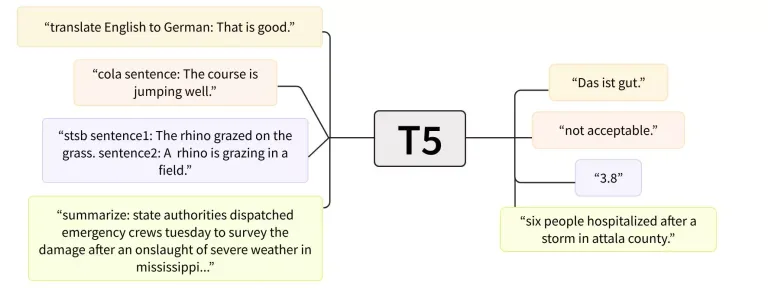

Flan T5 is a variant of the T5 model, which uses the sequence-to-sequence (Seq2Seq) architecture. If you want to dive deeper into this, check out the slides from UT Austin about Seq2Seq.

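Before using the library, you will need to install it. Pipelines also need a deep learning backend such as PyTorch, so install one if you don't have it yet:

```bash
pip install transformers torch
```

Then run the following code: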

```python
from transformers import pipeline

# Input text; the trailing backslashes join the lines inside the string.
text = """
dminhvu.com is a programming-focused blog created by two students in Vietnam. \
With deep experience in Python and JavaScript, we will write intensive tutorials around those. \
Elastic Stack is also a main field we are working on. At dminhvu.com, we create content about programming and tech. \
You may find coding-related articles, tutorials, and more. We hope our content will help you in your journey.
"""

# Load a Flan T5 checkpoint fine-tuned for summarization (downloaded on first run).
summarizer = pipeline("summarization", model="marianna13/flan-t5-base-summarization")

# min_length and max_length are measured in tokens, not words.
print(summarizer(text, min_length=40, max_length=50))

# Output:
# [{'summary_text': '"dminhvu.com is a programming-focused blog created by two Vietnamese students with deep experience in Python and JavaScript. \
# They are also working on Elastic Stack. They create content about programming and tech, with articles, tutorial.'}]
```

You can change the model parameter to use other models, such as facebook/bart-large-cnn or google/pegasus-large. You can find more summarization models on the Hugging Face Models page.
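Since only the `model` argument changes, one way to try several checkpoints on the same text is to loop over model names. The sketch below uses a hypothetical helper, `summarize_with_models`, which is not part of Transformers. It takes the pipeline factory as a parameter (`make_pipeline`, also a name of my own) so it can be exercised without downloading anything; in real use you would pass `transformers.pipeline`. It assumes every listed model works with the "summarization" task and returns the `summary_text` key seen in the output above.

```python
from typing import Any, Callable, Dict, Iterable, List


# Hypothetical helper: run one text through several summarization checkpoints.
# `make_pipeline` is assumed to behave like transformers.pipeline, i.e.
# make_pipeline("summarization", model=name) returns a callable summarizer.
def summarize_with_models(
    text: str,
    model_names: Iterable[str],
    make_pipeline: Callable[..., Callable[..., List[Dict[str, Any]]]],
    **generate_kwargs: Any,
) -> Dict[str, str]:
    summaries: Dict[str, str] = {}
    for name in model_names:
        summarizer = make_pipeline("summarization", model=name)
        # The summarization pipeline returns a list with one dict per input text.
        output = summarizer(text, **generate_kwargs)
        summaries[name] = output[0]["summary_text"]
    return summaries


# Usage (each model is downloaded on first run):
# from transformers import pipeline
# results = summarize_with_models(
#     text,
#     ["marianna13/flan-t5-base-summarization", "facebook/bart-large-cnn"],
#     pipeline,
#     min_length=40,
#     max_length=50,
# )
# for name, summary in results.items():
#     print(name, "->", summary)
```

Passing the factory in, instead of importing it inside the function, is only a design choice to keep the helper easy to test.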

You can also adjust the min_length and max_length parameters to control how long the summary should be.

In this case, I set min_length = 40 and max_length = 50 to get a summary of roughly 40 to 50 tokens, which is shorter than the original text. Note that these limits count tokens, not words: a single word can be split into several tokens.
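Because `min_length` and `max_length` are measured in tokens rather than words, it can help to check how much shorter a summary actually is. Here is a minimal sketch with hypothetical helpers (`word_count`, `compression_ratio`), assuming a simple whitespace word count is good enough for a rough comparison; it is not the model's tokenizer. The sample strings are the input text and the Flan T5 output shown above.

```python
def word_count(text: str) -> int:
    # Rough word count: split on any whitespace. This is NOT how the model
    # counts tokens; it is only a quick sanity check.
    return len(text.split())


def compression_ratio(original: str, summary: str) -> float:
    """Return summary length divided by original length, in words."""
    original_words = word_count(original)
    if original_words == 0:
        raise ValueError("original text is empty")
    return word_count(summary) / original_words


original = (
    "dminhvu.com is a programming-focused blog created by two students in Vietnam. "
    "With deep experience in Python and JavaScript, we will write intensive tutorials around those. "
    "Elastic Stack is also a main field we are working on. "
    "At dminhvu.com, we create content about programming and tech. "
    "You may find coding-related articles, tutorials, and more. "
    "We hope our content will help you in your journey."
)

# The Flan T5 summary printed above (with the 50-token limit).
summary = (
    '"dminhvu.com is a programming-focused blog created by two Vietnamese students '
    "with deep experience in Python and JavaScript. "
    "They are also working on Elastic Stack. "
    "They create content about programming and tech, with articles, tutorial."
)

print(f"{compression_ratio(original, summary):.2f}")  # prints 0.54
```

The summary has 34 whitespace-separated words even though max_length is 50, which fits with the limit being counted in tokens.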

Text Summarization in Python with T5 One Line Transformers Model

Another T5-based model that can be used for summarization tasks is T5 One Line (t5-one-line-summary). You can find its model page on Hugging Face under snrspeaks/t5-one-line-summary.

```python
from transformers import pipeline

# The original text (kept for reference; the model receives text_derived below).
text = """
dminhvu.com is a programming-focused blog created by two students in Vietnam. \
With deep experience in Python and JavaScript, we will write intensive tutorials around those. \
Elastic Stack is also a main field we are working on. At dminhvu.com, we create content about programming and tech. \
You may find coding-related articles, tutorials, and more. We hope our content will help you in your journey.
"""

# The same text wrapped in "Text:" and "Summarization:" markers so the
# text-to-text model knows which task to perform.
text_derived = """
Text: dminhvu.com is a programming-focused blog created by two students in Vietnam. \
With deep experience in Python and JavaScript, we will write intensive tutorials around those. \
Elastic Stack is also a main field we are working on. At dminhvu.com, we create content about programming and tech. \
You may find coding-related articles, tutorials, and more. We hope our content will help you in your journey. \
Summarization:
"""

summarizer = pipeline("text2text-generation", model="snrspeaks/t5-one-line-summary")
print(summarizer(text_derived, max_length=128))

# Output:
# [{'generated_text': 'dminhvu.com: A blog about coding and tech'}]
```

As its name suggests, this model generates a one-line summary of the input text.

Since this model runs through the general-purpose text2text-generation pipeline rather than the summarization pipeline, we need to tell it that we want a summary. That is why I added `Text:` before the input text and `Summarization:` after it.
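Instead of pasting the input twice, you could build the prompt from `text`. A minimal sketch with a hypothetical `build_prompt` helper: the `Text:` and `Summarization:` markers are the ones used above, while collapsing all whitespace into single spaces is my own assumption about a reasonable cleanup, not something the model is documented to require.

```python
def build_prompt(text: str, prefix: str = "Text:", suffix: str = "Summarization:") -> str:
    """Wrap the input in the "Text: ... Summarization:" markers used above.

    Whitespace (including the newlines of a triple-quoted string) is
    collapsed to single spaces so the prompt is one clean line.
    """
    body = " ".join(text.split())
    return f"{prefix} {body} {suffix}"


text = """
dminhvu.com is a programming-focused blog created by two students in Vietnam.
We hope our content will help you in your journey.
"""

print(build_prompt(text))
# Text: dminhvu.com is a programming-focused blog created by two students in Vietnam. We hope our content will help you in your journey. Summarization:
```

The result can then be passed to the pipeline in place of `text_derived`, for example `summarizer(build_prompt(text), max_length=128)`.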

Text Summarization in Python with BART Large CNN Transformers Model

BART Large CNN (facebook/bart-large-cnn) is a model released by Facebook: the large BART model fine-tuned on the CNN/Daily Mail news dataset. Like T5, it is a Seq2Seq model, and it can be used for text summarization.

In this case, we will use a model derived from BART Large CNN: Qiliang/bart-large-cnn-samsum-ChatGPT_v3.

```python
from transformers import pipeline

# The original text (kept for reference; the model receives text_derived below).
text = """
dminhvu.com is a programming-focused blog created by two students in Vietnam. \
With deep experience in Python and JavaScript, we will write intensive tutorials around those. \
Elastic Stack is also a main field we are working on. At dminhvu.com, we create content about programming and tech. \
You may find coding-related articles, tutorials, and more. We hope our content will help you in your journey.
"""

# The same text wrapped in "Text:" and "Summarization:" markers.
text_derived = """
Text: dminhvu.com is a programming-focused blog created by two students in Vietnam. \
With deep experience in Python and JavaScript, we will write intensive tutorials around those. \
Elastic Stack is also a main field we are working on. At dminhvu.com, we create content about programming and tech. \
You may find coding-related articles, tutorials, and more. We hope our content will help you in your journey. \
Summarization:
"""

summarizer = pipeline("text2text-generation", model="Qiliang/bart-large-cnn-samsum-ChatGPT_v3")
print(summarizer(text_derived, max_length=128))

# Output:
# [{'generated_text': 'The dminhvu.com is a programming-focused blog created by two students in Vietnam. \
# They have experience in Python and JavaScript and are working on Elastic Stack. They create content about programming and tech, including articles, tutorials, and more. \
# They hope their content will help you in your journey.'}]
```

This summary reads the most "human" of the three and seems more meaningful than the others.
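To compare the three models side by side, note that they return their text under different keys: the summarization pipeline uses `summary_text`, while text2text-generation uses `generated_text`, as the outputs above show. A minimal sketch with a hypothetical `extract_summary` helper that reads either shape, using the outputs printed earlier in this tutorial as sample data and a rough whitespace word count as the comparison.

```python
from typing import Any, Dict, List


def extract_summary(result: List[Dict[str, Any]]) -> str:
    """Return the text of the first item in a pipeline result.

    The "summarization" pipeline puts the text under "summary_text", while
    "text2text-generation" uses "generated_text".
    """
    if not result:
        raise ValueError("the pipeline returned no results")
    first = result[0]
    for key in ("summary_text", "generated_text"):
        if key in first:
            return first[key]
    raise KeyError(f"no summary field found in keys {sorted(first)}")


# Sample data: the outputs printed earlier in this tutorial.
flan_summary = (
    '"dminhvu.com is a programming-focused blog created by two Vietnamese students '
    "with deep experience in Python and JavaScript. "
    "They are also working on Elastic Stack. "
    "They create content about programming and tech, with articles, tutorial."
)
bart_summary = (
    "The dminhvu.com is a programming-focused blog created by two students in Vietnam. "
    "They have experience in Python and JavaScript and are working on Elastic Stack. "
    "They create content about programming and tech, including articles, tutorials, and more. "
    "They hope their content will help you in your journey."
)
outputs = {
    "marianna13/flan-t5-base-summarization": [{"summary_text": flan_summary}],
    "snrspeaks/t5-one-line-summary": [{"generated_text": "dminhvu.com: A blog about coding and tech"}],
    "Qiliang/bart-large-cnn-samsum-ChatGPT_v3": [{"generated_text": bart_summary}],
}

for model_name, result in outputs.items():
    summary = extract_summary(result)
    # Rough length comparison using whitespace-separated words.
    print(f"{model_name}: {len(summary.split())} words")
# marianna13/flan-t5-base-summarization: 34 words
# snrspeaks/t5-one-line-summary: 7 words
# Qiliang/bart-large-cnn-samsum-ChatGPT_v3: 47 words
```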

Conclusion

That's all on how to summarize text in Python using the Hugging Face Transformers library.

You can discover more models on the Hugging Face Models page.
